Understanding the Machine Learning Pipeline

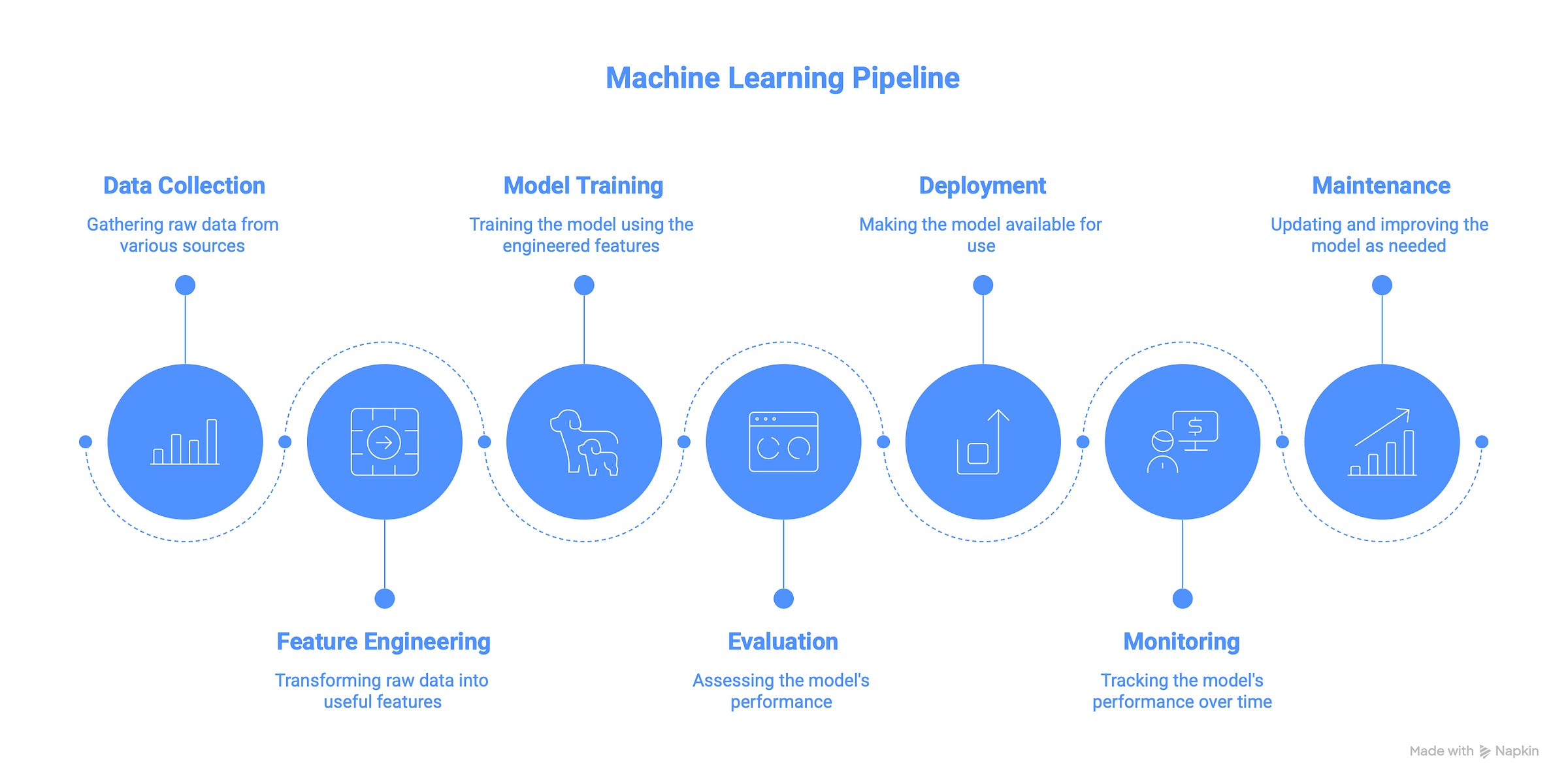

A machine learning pipeline transforms raw inputs into reliable, production-ready models through a series of distinct stages. Each phase adds structure and checks, from gathering data to keeping a live model healthy. This guide walks you through Data Collection, Feature Engineering, Model Training, Evaluation, Deployment, Monitoring, and Maintenance in straightforward terms.

The purpose of this post is to provide readers with a mental map for any ML project. Let’s dive in.

1. Data Collection

The process begins with gathering relevant data from various sources. These might include databases, logs, CSV files, APIs, and sensor feeds. It’s crucial to define what is needed before pulling in every field. Objectives and data schema should be sketched out early to save time in later stages. Privacy rules and security policies must be respected during record ingestion. Each dataset should be tagged with metadata that captures when, where, and how it arrived. This context supports traceability and reproducibility in analysis and modeling. A solid foundation of clean, well-understood inputs reduces surprises down the road.
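As a minimal sketch of these ideas, the Python snippet below validates a CSV extract against an expected schema before anything else touches it, keeps only the planned fields, and attaches provenance metadata (where, when, how, plus a content hash for reproducibility). The schema `EXPECTED_SCHEMA`, the function `ingest_csv`, and the metadata fields are illustrative assumptions, not a standard. Real pipelines often hand this job to a dedicated data-validation tool.

```python
import csv
import hashlib
import io
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical expected schema: column name -> converter used to validate each value.
EXPECTED_SCHEMA = {"user_id": int, "amount": float, "country": str}


@dataclass
class IngestedDataset:
    rows: list
    metadata: dict = field(default_factory=dict)


def ingest_csv(text: str, source: str) -> IngestedDataset:
    """Parse CSV text, check it against EXPECTED_SCHEMA, and tag it with metadata."""
    reader = csv.DictReader(io.StringIO(text))
    missing = set(EXPECTED_SCHEMA) - set(reader.fieldnames or [])
    if missing:
        raise ValueError(f"missing columns: {sorted(missing)}")

    rows = []
    for line_no, raw in enumerate(reader, start=2):  # line 1 is the header
        try:
            # Keep only the fields we planned for, converted to their expected types.
            rows.append({col: cast(raw[col]) for col, cast in EXPECTED_SCHEMA.items()})
        except (TypeError, ValueError) as exc:
            raise ValueError(f"line {line_no}: {exc}") from exc

    metadata = {
        "source": source,                                        # where it came from
        "ingested_at": datetime.now(timezone.utc).isoformat(),   # when it arrived
        "format": "csv",                                         # how it arrived
        "row_count": len(rows),
        "sha256": hashlib.sha256(text.encode("utf-8")).hexdigest(),  # exact content fingerprint
    }
    return IngestedDataset(rows=rows, metadata=metadata)
```

Failing fast on a missing column or an unparseable value keeps bad records from silently flowing into feature engineering.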

2. Feature Engineering

Raw attributes rarely arrive in the shape and scale a model requires. Feature engineering transforms these inputs into meaningful variables. Numeric fields might be normalized, categorical ones encoded, or parts of timestamps extracted. Domain expertise helps craft interaction terms or rolling averages for time-series data. For text tasks, tokenization or embeddings convert words into numbers. Techniques like PCA or feature selection help manage high dimensionality. Each crafted feature should carry a clear rationale about its role in prediction. Well-engineered features often boost performance more than complex algorithms.
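Here is a minimal, dependency-free sketch of several transformations mentioned above: z-score normalization, one-hot encoding, timestamp decomposition, and a trailing rolling average. All function names are hypothetical. Note that the scaling statistics and category vocabularies are learned from training rows only and then reused, which avoids leaking information from validation or test data. In practice, libraries such as scikit-learn or pandas provide tested versions of these steps.

```python
import math
from datetime import datetime


def fit_feature_stats(train_rows, numeric_cols, categorical_cols):
    """Learn scaling parameters and category vocabularies from training data only."""
    stats = {"numeric": {}, "categories": {}}
    for col in numeric_cols:
        values = [row[col] for row in train_rows]
        mean = sum(values) / len(values)
        # Population standard deviation; fall back to 1.0 for constant columns.
        std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values)) or 1.0
        stats["numeric"][col] = (mean, std)
    for col in categorical_cols:
        stats["categories"][col] = sorted({row[col] for row in train_rows})
    return stats


def transform_row(row, stats, timestamp_col):
    """Turn one raw record into a flat dict of model-ready numeric features."""
    features = {}
    # Normalize numeric fields to zero mean, unit variance (z-score).
    for col, (mean, std) in stats["numeric"].items():
        features[f"{col}_z"] = (row[col] - mean) / std
    # One-hot encode categoricals; categories unseen in training become all zeros.
    for col, vocab in stats["categories"].items():
        for value in vocab:
            features[f"{col}={value}"] = 1.0 if row[col] == value else 0.0
    # Extract parts of the timestamp that often carry signal.
    ts = datetime.fromisoformat(row[timestamp_col])
    features["hour"] = float(ts.hour)
    features["day_of_week"] = float(ts.weekday())  # Monday = 0
    return features


def rolling_mean(values, window):
    """Trailing rolling average for a time-ordered series (shorter window at the start)."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out
```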

3. Model Training

With features in place, a model is trained to learn underlying patterns. The process starts by selecting an algorithm family—linear models, decision trees, or neural networks—based on the problem. Data is split into training and validation sets to catch overfitting before it sneaks in. Hyperparameter tuning is automated with grid search, random search, or Bayesian methods. Training metrics like loss curves and convergence behavior are monitored. Each experiment’s configuration and results are tracked with an experiment management tool. Automating repeatable training pipelines prevents manual errors and ensures reproducibility. A robust training loop lays the groundwork for reliable predictions.
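To make the split-then-tune loop concrete, the sketch below fits a one-feature ridge regression in closed form and grid-searches its regularization strength `lam` against a held-out validation set. The model choice, the 25% validation fraction, and the in-memory `experiments` list (standing in for a real experiment-tracking tool) are all illustrative assumptions. Fixing the random seed makes the split, and therefore every run, reproducible.

```python
import random


def train_val_split(xs, ys, val_fraction=0.25, seed=0):
    """Shuffle indices with a fixed seed so the same split is produced on every run."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    n_val = max(1, int(len(idx) * val_fraction))
    train_idx, val_idx = idx[n_val:], idx[:n_val]
    return ([xs[i] for i in train_idx], [ys[i] for i in train_idx],
            [xs[i] for i in val_idx], [ys[i] for i in val_idx])


def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge regression for one feature; only the slope is penalized.

    Assumes the training xs are not all identical when lam == 0.
    """
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / (sxx + lam)
    return w, y_mean - w * x_mean


def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grid_search(xs, ys, lambdas, seed=0):
    """Try each regularization strength, score it on validation data, and log every run."""
    x_tr, y_tr, x_val, y_val = train_val_split(xs, ys, seed=seed)
    experiments = []  # stand-in for an experiment-tracking tool
    for lam in lambdas:
        w, b = fit_ridge_1d(x_tr, y_tr, lam)
        experiments.append({
            "lambda": lam,
            "seed": seed,
            "train_mse": mse(x_tr, y_tr, w, b),
            "val_mse": mse(x_val, y_val, w, b),
            "params": (w, b),
        })
    best = min(experiments, key=lambda e: e["val_mse"])
    return best, experiments
```

Swapping grid search for random or Bayesian search changes only how candidate values of `lam` are proposed; the train, score, and log loop stays the same.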

4. Evaluation

Evaluation measures how well a model performs on unseen data. Metrics should align with real-world goals: accuracy, precision/recall, RMSE, AUC, or custom business KPIs. A hold-out test set that never touched training or tuning is reserved to avoid leakage. Performance is visualized with confusion matrices or ROC curves to spot strengths and weaknesses. Error cases are examined individually to understand the model’s blind spots. Bias is checked by comparing results across different subgroups. Findings are documented clearly so stakeholders know where the model can and cannot be relied on. Thorough validation builds confidence before production rollout.
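The following sketch computes confusion-matrix counts, precision, and recall for binary labels, then repeats the calculation per subgroup so uneven performance stands out. It is a plain-Python illustration with hypothetical function names. Precision or recall is reported as 0.0 when its denominator is zero, which is a convention choice rather than a universal rule.

```python
from collections import defaultdict


def confusion_counts(y_true, y_pred):
    """Count true/false positives/negatives for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    return {
        "tp": sum(1 for t, p in pairs if t == 1 and p == 1),
        "fp": sum(1 for t, p in pairs if t == 0 and p == 1),
        "fn": sum(1 for t, p in pairs if t == 1 and p == 0),
        "tn": sum(1 for t, p in pairs if t == 0 and p == 0),
    }


def precision_recall(c):
    """Precision and recall from confusion counts; 0.0 when undefined."""
    precision = c["tp"] / (c["tp"] + c["fp"]) if c["tp"] + c["fp"] else 0.0
    recall = c["tp"] / (c["tp"] + c["fn"]) if c["tp"] + c["fn"] else 0.0
    return precision, recall


def metrics_by_group(y_true, y_pred, groups):
    """Compute precision/recall separately for each subgroup to surface uneven performance."""
    buckets = defaultdict(lambda: ([], []))
    for t, p, g in zip(y_true, y_pred, groups):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: precision_recall(confusion_counts(ts, ps)) for g, (ts, ps) in buckets.items()}
```

Listing the indices where `y_true` and `y_pred` disagree is a simple starting point for the error analysis described above.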

5. Deployment

Deployment turns a validated model into a usable service or batch task. The model is containerized with Docker or packaged for serverless platforms. Inference logic is wrapped in a simple API or integrated into an existing data pipeline. Model artifacts and API definitions are version-controlled to track changes over time. Deployment pipelines are automated to eliminate manual hand-offs and reduce errors. Endpoints are load-tested to verify they meet latency and throughput requirements. Fallback logic or circuit breakers are included to handle unexpected failures gracefully. Smooth, repeatable deployment processes make going live painless and predictable.
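Containerization and load testing depend on your platform, but the fallback logic can be sketched on its own. Below is a minimal circuit breaker, assuming a synchronous `predict_fn` and a constant fallback value (for example, a cached or rule-based default). After `max_failures` consecutive errors it stops calling the model for `reset_after_s` seconds, then allows a single trial call. The class and parameter names are hypothetical.

```python
import time


class CircuitBreaker:
    """Wrap a prediction function; after repeated failures, serve a fallback for a cool-down period."""

    def __init__(self, predict_fn, fallback, max_failures=3, reset_after_s=30.0,
                 clock=time.monotonic):
        self.predict_fn = predict_fn
        self.fallback = fallback          # e.g. a cached or rule-based default
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self.clock = clock                # injectable so tests can control time
        self.failures = 0
        self.opened_at = None             # None means the circuit is closed

    def predict(self, features):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after_s:
                return self.fallback      # open: skip the model entirely
            # Half-open: allow one trial call; a single further failure reopens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = self.predict_fn(features)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            return self.fallback
        self.failures = 0                 # success closes the circuit again
        return result
```

Injecting the clock keeps the breaker deterministic under test, and the fallback path means callers always receive an answer even while the model service is down.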

6. Monitoring

Once in production, a model enters a dynamic environment that can drift over time. Input feature distributions are monitored to catch covariate drift early. Performance metrics are continuously tracked against expected baselines or business KPIs. The service is instrumented to log latency, error rates, and resource usage. Alerts are set to trigger when metrics deviate beyond safe thresholds. Diagnostic dashboards are used to spot anomalies at a glance. Regular health checks ensure the service remains stable under varying loads.
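One common way to watch for covariate drift is the Population Stability Index (PSI): the sum over bins of (live share - baseline share) × ln(live share / baseline share). The sketch below makes several assumptions: the bin edges are supplied by the caller, empty bins are floored at a small `eps` to avoid taking the log of zero, samples are non-empty, and the 0.25 alert threshold is a widely quoted rule of thumb rather than a universal standard. Function names are hypothetical.

```python
import bisect
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index between a baseline sample and a live sample.

    `edges` are ascending bin boundaries; values beyond them fall into the end bins.
    """
    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[bisect.bisect_right(edges, v)] += 1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def check_drift(feature, baseline, live, edges, threshold=0.25):
    """Return an alert message when PSI exceeds the (assumed) threshold, else None."""
    score = psi(baseline, live, edges)
    if score > threshold:
        return f"ALERT: {feature} PSI={score:.3f} exceeds {threshold}"
    return None
```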

7. Maintenance

Maintenance keeps a model effective as data and requirements evolve. Over time, input distributions shift, feature relevance changes, and new business rules emerge. A proactive maintenance plan ensures the pipeline doesn’t silently degrade.

A retraining cadence is established based on data volume and observed drift. Every model artifact—training code, feature transformations, and weights—is versioned to allow rollback or comparison of historic performance.
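The retraining cadence and versioning described above can be sketched as two small pieces: a policy function that decides when to retrain, and an in-memory registry that versions each artifact by a content hash so a promotion can be rolled back. The thresholds (drift above 0.25, 10,000 new labeled rows, 30 days of age), the class `ModelRegistry`, and its methods are hypothetical placeholders; production setups usually rely on a dedicated model registry service.

```python
import hashlib
import json
from datetime import timedelta


def should_retrain(drift_score, new_labeled_rows, last_trained, now,
                   drift_threshold=0.25, min_new_rows=10_000, max_age=timedelta(days=30)):
    """Hypothetical cadence policy: retrain on drift, enough fresh labels, or a stale model."""
    return (drift_score > drift_threshold
            or new_labeled_rows >= min_new_rows
            or now - last_trained >= max_age)


class ModelRegistry:
    """In-memory stand-in for a model registry; versions are content hashes."""

    def __init__(self):
        self.versions = {}   # version id -> artifact record
        self.history = []    # promotion order, newest last

    def register(self, code_ref, feature_config, weights, metrics):
        # Hash code reference, feature config, and weights together so any change yields a new id.
        payload = json.dumps(
            {"code": code_ref, "features": feature_config, "weights": weights}, sort_keys=True)
        version = hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
        self.versions[version] = {"code": code_ref, "features": feature_config,
                                  "weights": weights, "metrics": metrics}
        return version

    def promote(self, version):
        if version not in self.versions:
            raise KeyError(version)
        self.history.append(version)

    @property
    def live(self):
        return self.history[-1] if self.history else None

    def rollback(self):
        """Revert to the previously promoted version and return its id."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.live
```

Storing each version's metrics alongside its artifacts makes it straightforward to compare historic performance before deciding to roll back.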

Tests are automated to validate feature pipelines and inference logic after updates. New data sources or labels are incorporated when business needs shift, and their impact is then validated through the evaluation suite. Obsolete features are retired to prevent bloat, and the effect of each removal on performance is monitored.
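Automated checks after each update can start as ordinary unit tests. The sketch below uses Python’s built-in `unittest` module to pin golden values for a feature transform and to check basic properties of the inference output. Both `scale_amount` and `predict_proba` are hypothetical stand-ins with frozen parameters; in a real project the tests would import your actual pipeline code. Run it with `python -m unittest` followed by the module name.

```python
import math
import unittest


def scale_amount(amount, mean=50.0, std=10.0):
    """Hypothetical feature transform under test: z-score with frozen training statistics."""
    return (amount - mean) / std


def predict_proba(features):
    """Hypothetical inference logic: a fixed logistic model over the scaled feature."""
    return 1.0 / (1.0 + math.exp(-features["amount_z"]))


class FeaturePipelineTests(unittest.TestCase):
    def test_scaling_matches_golden_values(self):
        # Golden values pinned from a trusted run; a refactor must not change them.
        self.assertAlmostEqual(scale_amount(50.0), 0.0)
        self.assertAlmostEqual(scale_amount(70.0), 2.0)

    def test_inference_output_is_a_probability(self):
        for amount in (-1e3, 0.0, 50.0, 1e3):
            p = predict_proba({"amount_z": scale_amount(amount)})
            self.assertTrue(0.0 <= p <= 1.0)

    def test_missing_feature_fails_loudly(self):
        with self.assertRaises(KeyError):
            predict_proba({})
```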

Wrapping Up

A robust machine learning pipeline weaves together data collection, feature engineering, model training, evaluation, deployment, monitoring, and maintenance. Each stage builds on the last to create a transparent, reproducible path from raw inputs to reliable predictions. Skipping any phase invites hidden bias, untraceable errors, and brittle systems.

When this end-to-end flow is followed, the right data is captured with clear ownership. That data is distilled into features that carry meaningful signals. Models are trained under controlled conditions and validated on truly unseen cases. Deployment is handled with repeatable pipelines, version control, and safety nets. Monitoring is continuous, adapting to real-world drift rather than hoping nothing breaks.

With this structure in place, engineers can iterate on features or algorithms, and the team can focus on building next-generation capabilities. More importantly, stakeholders can see clear metrics that connect model outputs to business impact.