Understanding the Nature of Data Around Us # 2

Schema, Statistical Analysis & Pattern Recognition

In Part 1, we established that data is the new oil requiring systematic exploration, and we discovered the three fundamental structures of information. Now let's understand how to organize this raw material and extract its intelligence through systematic analysis.

The patterns we seek don't emerge by accident—they require deliberate frameworks and scientific approaches to surface and validate.

The Blueprint for Pattern Recognition

Schema functions as an architectural blueprint for data analysis. It provides the controlled structure needed for consistent, repeatable pattern recognition. It's the difference between random observation and systematic discovery.

The Conceptual Foundation

Schema is the scientific method applied to data organization. It creates systematic classification frameworks that reveal underlying patterns in a data system.

Core Principles:

Standardization: Ensure consistent measurement and categorization

Validation: Provide quality control for pattern reliability

Relationships: Define how different data elements connect and influence each other

Evolution: Allow systematic growth and refinement over time

The Pattern Framework Components

To illustrate these principles in practice, consider a simple experiment-tracking schema:

    CREATE TABLE ExperimentResults (
        experiment_id     INT PRIMARY KEY,        -- Unique pattern identifier
        hypothesis        VARCHAR(500) NOT NULL,  -- Pattern prediction
        measurement_value DECIMAL(10,4),          -- Quantified observation
        confidence_level  DECIMAL(3,2),           -- Pattern certainty (0.00-1.00)
        observation_date  TIMESTAMP               -- Temporal pattern marker
    );

Schema validation works through constraint rules that protect pattern reliability. Key and NOT NULL constraints guarantee that every observation is identifiable and complete, range constraints keep measurement values within plausible limits, and confidence levels are bounded between 0.00 and 1.00. Temporal markers enable time-series pattern analysis, and a free-text notes field, where one is included, preserves observational context.
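As a minimal sketch of constraint-based validation, the snippet below recreates a simplified version of the ExperimentResults table in SQLite (chosen only because it ships with Python) and attempts three inserts. The column types are adapted to SQLite, and the sample rows and the `try_insert` helper are hypothetical names introduced for illustration.

```python
import sqlite3

# In-memory database; nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE ExperimentResults (
        experiment_id     INTEGER PRIMARY KEY,
        hypothesis        TEXT NOT NULL,
        measurement_value REAL,
        confidence_level  REAL CHECK (confidence_level BETWEEN 0.0 AND 1.0),
        observation_date  TEXT
    )
""")

def try_insert(row):
    """Return True if the row satisfies the schema, False if a constraint rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute("INSERT INTO ExperimentResults VALUES (?, ?, ?, ?, ?)", row)
        return True
    except sqlite3.IntegrityError:
        return False

# Hypothetical sample rows: one valid, two that break a constraint.
valid_row = (1, "Sales rise in Q4", 12.5, 0.95, "2024-01-15T09:00:00")
bad_confidence = (2, "Sales rise in Q4", 12.5, 1.70, "2024-01-15T09:00:00")
missing_hypothesis = (3, None, 12.5, 0.80, "2024-01-15T09:00:00")

results = [try_insert(r) for r in (valid_row, bad_confidence, missing_hypothesis)]
print(results)
```

Only the first row is stored. The other two are rejected by the database itself, so the check does not depend on every application remembering to perform it.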

Beyond individual data integrity, schema defines relationship patterns between different elements, creating interconnected networks that mirror complex interdependencies found in natural systems. Well-designed schemas capture the web of connections that exist in real-world phenomena, enabling analysts to discover systematic relationships that drive meaningful insights.
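One common way to express such relationships is a foreign key. The sketch below assumes a hypothetical two-table layout (`Experiments` and `Observations`, neither of which appears in the schema above) and again uses SQLite through Python for convenience.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# SQLite does not enforce foreign keys unless this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
    CREATE TABLE Experiments (
        experiment_id INTEGER PRIMARY KEY,
        hypothesis    TEXT NOT NULL
    );
    CREATE TABLE Observations (
        observation_id    INTEGER PRIMARY KEY,
        experiment_id     INTEGER NOT NULL REFERENCES Experiments(experiment_id),
        measurement_value REAL
    );
    INSERT INTO Experiments VALUES (1, 'Usage drops before churn');
    INSERT INTO Observations VALUES (10, 1, 0.62), (11, 1, 0.48);
""")

# An observation that points at a non-existent experiment is rejected.
try:
    conn.execute("INSERT INTO Observations VALUES (12, 99, 0.30)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

# The declared relationship lets us join observations back to their hypothesis.
rows = conn.execute("""
    SELECT e.hypothesis, COUNT(o.observation_id), AVG(o.measurement_value)
    FROM Experiments e
    JOIN Observations o ON o.experiment_id = e.experiment_id
    GROUP BY e.experiment_id
""").fetchall()
print(orphan_rejected, rows)
```

Because the link between the two tables is part of the schema, every observation is guaranteed to belong to a known experiment, and the join needs no defensive cleanup.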

Advanced Schema Architectures

Modern data analysis requires hierarchical pattern recognition that operates simultaneously across multiple levels of abstraction. This multi-layered approach enables organizations to understand both granular individual behaviors and macro-level market dynamics within a single analytical framework.

The power of this approach lies in its ability to identify cross-level relationships. Individual preference evolution patterns can aggregate to reveal market-wide trend shifts, while competitive positioning data can help explain individual customer lifecycle transitions.
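A small illustration of multi-level analysis is to roll the same records up at different granularities. The records, field order, and `roll_up` helper below are hypothetical; the point is only that one dataset can answer individual, segment, and market questions.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (customer_id, segment, month, spend)
records = [
    ("c1", "retail", "2024-01", 100.0),
    ("c1", "retail", "2024-02", 120.0),
    ("c2", "retail", "2024-01", 80.0),
    ("c2", "retail", "2024-02", 90.0),
    ("c3", "enterprise", "2024-01", 500.0),
    ("c3", "enterprise", "2024-02", 450.0),
]

def roll_up(rows, key):
    """Average spend grouped by whatever key function is supplied."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[3])
    return {k: mean(v) for k, v in groups.items()}

by_customer = roll_up(records, key=lambda r: r[0])               # individual level
by_segment_month = roll_up(records, key=lambda r: (r[1], r[2]))  # segment level
by_month = roll_up(records, key=lambda r: r[2])                  # market level

print(by_customer)
print(by_segment_month)
print(by_month)
```

In this made-up data the market-wide average falls slightly from January to February while the retail segment rises, which is the kind of cross-level relationship a single-level view would hide.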

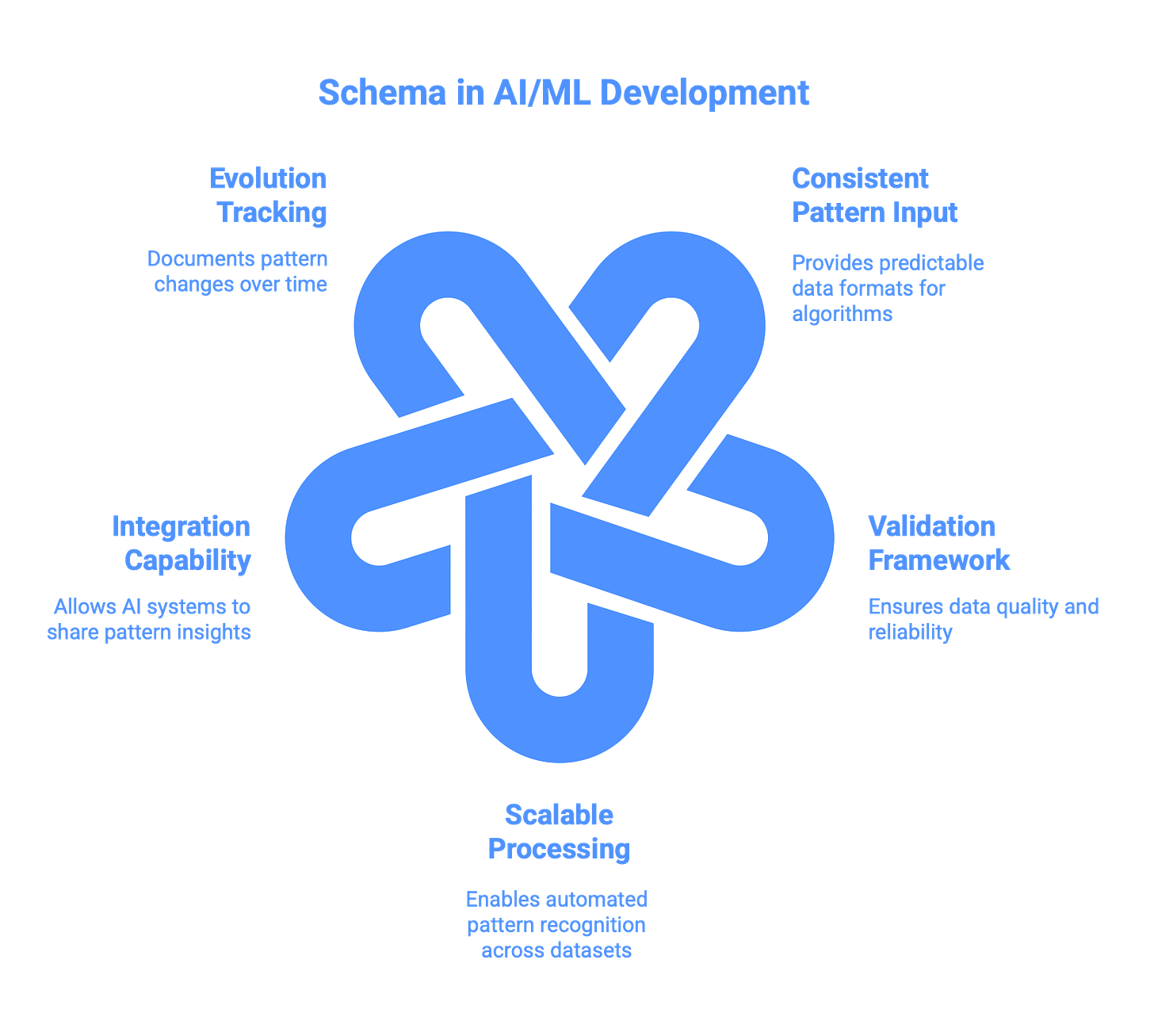

How Schema Enables AI/ML Development

Effective schema design forms the foundation upon which modern AI and machine learning systems build their pattern recognition capabilities:

Consistent Pattern Input: Algorithms require predictable data formats to identify reliable patterns at scale.

Validation Framework: Like peer review in scientific research, schema validation prevents unreliable data from corrupting pattern recognition processes.

Scalable Processing: Well-designed schemas allow AI systems to process millions of data points while maintaining pattern recognition accuracy.

Integration Capability: Schema standardization enables AI ecosystems where different systems can build upon each other's discoveries.

Evolution Tracking: A versioned schema documents how patterns change over time, enabling AI systems to adapt and improve their recognition capabilities.
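The first two points can be sketched as a validation gate in front of a model. The `Observation` class, the `parse` function, and the raw rows below are hypothetical; they stand in for whatever schema layer sits in front of a real training pipeline.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Observation:
    experiment_id: int
    measurement_value: float
    confidence_level: float
    observation_date: datetime

def parse(raw):
    """Convert a raw dict into an Observation, or return None if it breaks the schema."""
    try:
        obs = Observation(
            experiment_id=int(raw["experiment_id"]),
            measurement_value=float(raw["measurement_value"]),
            confidence_level=float(raw["confidence_level"]),
            observation_date=datetime.fromisoformat(raw["observation_date"]),
        )
    except (KeyError, TypeError, ValueError):
        return None  # missing field or unparseable value
    if not 0.0 <= obs.confidence_level <= 1.0:
        return None  # value outside the allowed range
    return obs

# Hypothetical raw input: one clean row and three with different defects.
raw_rows = [
    {"experiment_id": "1", "measurement_value": "3.2", "confidence_level": "0.9", "observation_date": "2024-03-01"},
    {"experiment_id": "2", "measurement_value": "n/a", "confidence_level": "0.8", "observation_date": "2024-03-02"},
    {"experiment_id": "3", "measurement_value": "4.1", "confidence_level": "1.4", "observation_date": "2024-03-03"},
    {"experiment_id": "4", "measurement_value": "2.7", "confidence_level": "0.7"},
]

clean = [obs for obs in map(parse, raw_rows) if obs is not None]
# Every feature row now has the same shape and types, which is what a model expects.
features = [[o.measurement_value, o.confidence_level] for o in clean]
print(features)
```

Silently dropping bad rows is a simplification. A production pipeline would more likely log or quarantine them so that data-quality problems stay visible.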

Traditional Data Analysis

Traditional data analysis represents the scientific method applied to pattern recognition—the systematic approach to extracting meaningful insights from structured observations. Rather than being obsolete in the age of AI, traditional analysis provides the foundational methods upon which modern AI/ML systems build.

The Scientific Pattern Recognition Process

Hypothesis Formation

Traditional data analysis starts with specific questions about patterns: "Do customers purchase more during certain seasons?" or "Can we predict equipment failure based on performance metrics?" This hypothesis-driven approach ensures analysis remains focused on meaningful patterns rather than random correlations.

Systematic Observation

Traditional analysis applies rigorous observation methods borrowed directly from experimental science: controlled data collection procedures, standardized measurement techniques, consistent categorization systems, and temporal pattern tracking.

Pattern Quantification

Mathematical methods quantify observed patterns with precision through descriptive statistics, correlation analysis, regression modeling, and time series analysis.

Pattern Recognition Methods

Descriptive Analysis

Descriptive analysis is the archaeological phase of data exploration: understanding what patterns exist in current data before attempting to predict future outcomes. These descriptive patterns become the foundation for predictive modeling because they show which parts of the data are most likely to carry predictive signal.
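As a rough sketch, the seasonal question raised earlier ("Do customers purchase more during certain seasons?") can first be approached descriptively by summarizing orders per quarter. The order data and the `quarter` helper are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical orders: (order date, amount)
orders = [
    (date(2024, 1, 15), 40.0),
    (date(2024, 2, 20), 55.0),
    (date(2024, 5, 3), 60.0),
    (date(2024, 8, 11), 45.0),
    (date(2024, 8, 30), 50.0),
    (date(2024, 11, 25), 120.0),
    (date(2024, 12, 5), 95.0),
    (date(2024, 12, 20), 130.0),
]

def quarter(d):
    """Map a date to its calendar quarter (1-4)."""
    return (d.month - 1) // 3 + 1

amounts_by_quarter = defaultdict(list)
for order_date, amount in orders:
    amounts_by_quarter[quarter(order_date)].append(amount)

# Describe what is there: count, total, and average per quarter.
summary = {
    q: {"orders": len(v), "total": sum(v), "average": mean(v)}
    for q, v in sorted(amounts_by_quarter.items())
}
busiest_quarter = max(summary, key=lambda q: summary[q]["total"])

for q, stats in summary.items():
    print(q, stats)
print("busiest quarter:", busiest_quarter)
```

Nothing here predicts anything yet. The summary simply shows where the volume sits, which in turn suggests what a later predictive model should pay attention to.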

Comparative Analysis

Comparative analysis identifies differences between groups or time periods to understand pattern variations and their likely causes. It shows not just what patterns exist but, when the comparison is well controlled, suggests why they exist, which supports more sophisticated AI models that account for causal relationships.
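One standard way to compare two groups is Welch's t statistic, which scales the difference in means by its estimated standard error without assuming equal variances. The sketch below uses made-up measurements and a hypothetical `welch_t` helper built on the standard library.

```python
from math import sqrt
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic for two independent samples with possibly unequal variances."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error

# Hypothetical weekly purchases per customer in two groups.
control = [20, 22, 19, 24, 21]
treatment = [25, 27, 24, 29, 26]

difference = mean(treatment) - mean(control)
t_stat = welch_t(treatment, control)
print(f"difference in means = {difference:.1f}, t = {t_stat:.2f}")
```

A large absolute t value suggests the gap between the groups is unlikely to be noise. Whether the treatment caused the gap still depends on how the groups were formed, which no statistic can settle on its own.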

Predictive Analysis

Predictive analysis uses historical patterns to forecast future occurrences with quantified confidence levels, forming the bridge between traditional analysis and automated AI systems.

For example, a customer churn model might find that a usage decline greater than 40% corresponds to a 75% probability of churn within 90 days, while a model that combines several factors might reach 92% prediction accuracy (illustrative figures).
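The single-factor rule in this example can be sketched as a simple threshold check. The account names, usage numbers, and `usage_decline` helper are hypothetical; the 40% threshold is the one quoted above, and the code only flags accounts without estimating a probability.

```python
CHURN_DECLINE_THRESHOLD = 0.40  # the 40% usage decline from the example above

def usage_decline(previous, current):
    """Fractional drop in usage between two periods (0.25 means a 25% decline)."""
    if previous <= 0:
        return 0.0  # no baseline to decline from
    return (previous - current) / previous

# Hypothetical accounts: (usage in the previous period, usage in the current period)
usage = {
    "acct_a": (200, 90),   # 55% decline
    "acct_b": (150, 140),  # about 7% decline
    "acct_c": (80, 60),    # 25% decline
    "acct_d": (0, 10),     # new account, no baseline
    "acct_e": (120, 60),   # 50% decline
}

at_risk = sorted(
    account
    for account, (previous, current) in usage.items()
    if usage_decline(previous, current) > CHURN_DECLINE_THRESHOLD
)
print(at_risk)
```

A real churn model would learn the threshold and the associated probability from historical outcomes rather than hard-coding them. The rule form, however, is what a learned model ultimately automates.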

These predictive models form the conceptual foundation that AI/ML algorithms later automate and scale. Traditional analysis establishes the logical framework, while AI systems provide the computational power to apply that framework across vast datasets in real time.